AI in Hiring: How to Ensure Unbiased Recruitment with Video Interviews

Recruitment has entered an era where artificial intelligence (AI) isn’t just a support function; it’s a driving force behind hiring efficiency, precision, and scalability. From parsing resumes to evaluating talent at scale, AI now sits at the core of talent acquisition systems.

For HR teams, this transformation has brought a leap in speed, scalability, and consistency. AI-driven workflows can review thousands of resumes in minutes, filter top matches, and automate interview scheduling and recording. This frees up recruiters’ time to focus on strategic decisions. But while AI promises objectivity, it also raises questions about fairness and accountability.

Algorithms learn from data, and if that data reflects historical or systemic inequalities, AI can unknowingly perpetuate them. For example, models trained on biased datasets might rate candidates differently based on accent, ethnicity, gender, or educational background, replicating the same patterns AI was meant to eliminate.

Avoiding this outcome depends on how responsibly we design, deploy, and monitor AI systems, especially in video interviewing, where perception, language, and human judgment intersect with algorithmic decision-making.

How Video Interviews Use AI

Among the many recruitment functions that have seamlessly integrated AI, video interviewing stands out as one of the most transformative. Video interview platforms use advanced AI capabilities to make every interaction more dynamic, data-driven, and human-like, reshaping how organizations evaluate talent.

Here’s how AI powers the modern video interview process:

a. Structured and Consistent Framework

In an AI-powered video interview, each candidate receives a standardized set of predefined, role-specific questions, carefully crafted based on the competencies required for the position. This helps maintain uniformity across all interviews, similar to how applicant tracking software standardizes resume screening and evaluation workflows.

For example, whether you’re hiring a sales executive or a fintech software developer, every interviewee for a given role is asked the same questions, designed to assess the competencies that role requires (for a sales role, these might be communication, persuasion, and product knowledge). The platform presents these questions in the same sequence and with the same time limits, making the process consistent and replicable.
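As a minimal sketch of what "same questions, same sequence, same time limits" can mean in practice, the question set can be defined once per role and reused unchanged for every candidate. The `Question` class, the sample prompts, and the time limits below are hypothetical and not taken from any specific platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    prompt: str
    competency: str
    time_limit_seconds: int


# Hypothetical question set for a sales executive role, defined once per role.
SALES_EXECUTIVE_INTERVIEW = (
    Question("Describe how you would introduce our product to a new client.",
             "communication", 120),
    Question("Tell us about a time you changed a hesitant buyer's mind.",
             "persuasion", 180),
    Question("Which product feature matters most to customers, and why?",
             "product knowledge", 120),
)


def build_interview(candidate_id: str) -> list:
    """Return the numbered question sequence for a candidate.

    The candidate_id is accepted only for bookkeeping; it never changes
    the questions, their order, or their time limits.
    """
    return list(enumerate(SALES_EXECUTIVE_INTERVIEW, start=1))
```

Because the question tuple is immutable and the candidate identifier is ignored when building the sequence, two candidates for the same role always receive an identical interview.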

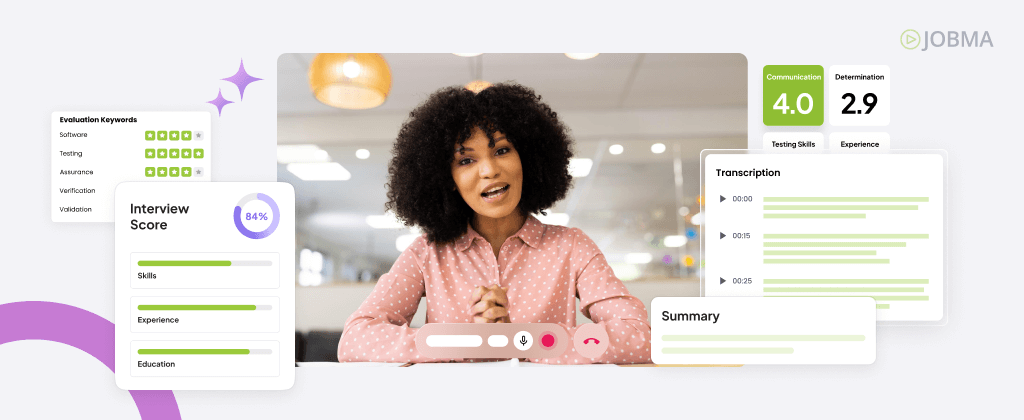

Once candidates submit their video responses, AI models analyze multiple dimensions, such as content relevance, knowledge accuracy, clarity of explanation, and verbal fluency, to generate structured evaluation data. You can then view this analysis to understand how well each candidate aligns with the required competencies.

b. Real-Time Transcription and Multilingual Translation

AI uses advanced speech recognition and natural language processing (NLP) models trained on thousands of hours of multilingual audio to transcribe every response in real time. Every word a candidate speaks is captured and synced with timestamps, building a verifiable transcript that HR teams can later review, audit, or share for cross-evaluation. This record helps ensure that scoring is based on the candidate’s actual response content, not on how fast they spoke, what accent they had, or whether the interviewer took complete notes.

Beyond transcription, AI-powered language translation can automatically translate a candidate’s responses into the recruiter’s preferred language while aiming to preserve meaning and tone. This feature helps reduce linguistic and geographical barriers, allowing organizations to assess global talent more fairly.

In practice, the AI listens to the audio input using automatic speech recognition (ASR) and NLP to convert it into text. It also tags the relevant skills, verbs, and job-specific terms for the recruiter. If a new language is detected, the translation model processes the response into the recruiter’s chosen language while preserving semantics and context. The final transcript is stored alongside the original video, so both the candidate’s real-time response and the AI’s record remain visible for transparency. You can view transcripts directly within the interviewing platform.

Consider a marketing role where candidates from the U.S., Mexico, and Germany participate in one-way video interviews. Each candidate responds in their preferred language: English, Spanish, or German. The AI automatically transcribes each video, translates all responses into English, and applies the same evaluation logic (e.g., clarity, relevance, creativity, and strategic thinking).

c. Dynamic and Adaptive Interaction

Through Natural Language Processing (NLP) and machine learning (ML), video interviewing systems dynamically adapt questions and responses based on how candidates answer in real time. This transforms the interview from a rigid questionnaire into a responsive, conversational experience, helping to humanize AI interactions so the process feels more natural and recruiter-like.

How it Works

- Understanding Candidate Intent: When a candidate responds, NLP algorithms analyze sentence structure and semantics to infer the intent behind their answer.

- Mapping to Skill Categories: The AI classifies that intent into job-relevant competency areas such as problem-solving, teamwork, or domain knowledge.

- Generating Adaptive Prompts: Based on the skill classification, the AI system selects the next question from a structured question bank. For instance, if a candidate mentions “data modeling” while answering a question about analytics, the AI may follow up with, “Can you describe a dataset you modeled and the outcome?”

- Real-time Learning: The machine learning model evaluates patterns across multiple candidates for the same role. Over time, it refines which follow-up questions produce the most meaningful distinctions between high and low performers.
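The first three steps above can be sketched in a few lines of Python. This is a simplified illustration, not how any particular platform works: plain keyword matching stands in for the NLP intent model, and the keyword map, question bank, and default prompt are all hypothetical.

```python
import re
from typing import Optional

# Hypothetical keyword-to-competency map (a stand-in for an NLP classifier).
KEYWORD_TO_COMPETENCY = {
    "data modeling": "domain knowledge",
    "root cause": "problem-solving",
    "my team": "teamwork",
}

# Hypothetical structured question bank, keyed by competency area.
FOLLOW_UPS = {
    "domain knowledge": "Can you describe a dataset you modeled and the outcome?",
    "problem-solving": "How did you confirm that you had found the real cause?",
    "teamwork": "How did you divide responsibilities within the team?",
}

DEFAULT_FOLLOW_UP = "Can you give a concrete example?"


def classify_competency(answer: str) -> Optional[str]:
    """Map an answer to the first competency whose keyword appears in it."""
    text = answer.lower()
    for keyword, competency in KEYWORD_TO_COMPETENCY.items():
        if re.search(r"\b" + re.escape(keyword) + r"\b", text):
            return competency
    return None


def next_question(answer: str) -> str:
    """Select the follow-up prompt for the detected competency, if any."""
    return FOLLOW_UPS.get(classify_competency(answer), DEFAULT_FOLLOW_UP)


print(next_question("I did a lot of data modeling for the analytics group."))
# Can you describe a dataset you modeled and the outcome?
```

Selecting follow-ups from a fixed bank, rather than generating them freely, keeps the adaptive part of the interview auditable: every possible question is known in advance.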

d. AI-Generated Interview Summaries and Insight

After each video interview, AI doesn’t just store recordings; it transforms them into structured, decision-ready insights. These summaries highlight key decision indicators, such as skill alignment, communication style, and overall role fit, that HR teams can review at a glance. Similar to how a video marketing agency crafts videos to deliver clear, impactful messages, AI distills complex interview interactions into actionable insights, helping recruiters make faster and more informed decisions.

The AI system scores each performance criterion, such as relevance, problem-solving, or domain expertise, based on the weights defined by recruiters beforehand to ensure role-specific consistency. The AI then generates a digestible summary report that captures critical decision factors:

- Key skills demonstrated and how they were evidenced

- Strengths and improvement areas

- Alignment with job competencies

- Recommended percentage score for candidate performance

For example, suppose you conduct a one-way interview for a product manager position. The candidate answers five pre-recorded questions focused on product strategy, stakeholder management, and analytics. The AI identifies phrases like “prioritized roadmap items using RICE framework” and “validated MVP success through A/B testing.” It maps these responses to competencies such as strategic thinking, data-driven decision-making, and stakeholder collaboration. Each response is scored for relevance, clarity, and outcome orientation. The system then generates a summary showing that the candidate excelled in analytical thinking and communication but provided limited depth on technical feasibility. This offers recruiters actionable insight without rewatching the entire video.
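One plausible way to combine per-criterion scores with recruiter-defined weights into a single percentage is a weighted average. The sketch below assumes each criterion is scored from 0 to 100; the function name, criteria, weights, and scores are illustrative only.

```python
def weighted_percentage(scores: dict, weights: dict) -> float:
    """Combine 0-100 criterion scores into one percentage using recruiter weights."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(scores[criterion] * weights[criterion] for criterion in weights)
    return round(weighted_sum / total_weight, 1)


# Hypothetical weights set by the recruiter before the interviews begin.
weights = {"relevance": 40, "clarity": 30, "outcome_orientation": 30}
# Hypothetical per-criterion scores for one candidate.
scores = {"relevance": 90, "clarity": 80, "outcome_orientation": 50}

print(weighted_percentage(scores, weights))  # 75.0
```

Fixing the weights before any candidate is scored is what keeps the result role-specific and consistent: the same answer quality always produces the same percentage.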

In essence, AI transforms video interviews into intelligent hiring experiences. It captures the depth of human dialogue, quantifies it through analytics, and delivers actionable insights, turning a once subjective process into a fast, fair, and consistent part of modern recruitment.

Understanding AI Bias in Hiring

Before addressing fairness, it’s critical to understand how bias enters AI systems. Algorithmic bias can creep in at several stages of the model development lifecycle.

- Training Data: Every AI model learns from data. If that data reflects biased historical patterns, those biases inevitably become part of the algorithm’s decision-making process. For example, imagine a recruitment dataset dominated by resumes of male candidates who previously held senior technical roles. If an AI system is trained on this dataset, it may begin associating “male” indicators with technical competence, even if gender isn’t an explicit variable. Over time, the system might score female candidates lower for similar roles simply because they don’t fit the learned pattern.

Similarly, if past hiring data shows a strong preference for candidates from a handful of elite universities, the AI could inadvertently deprioritize candidates from less-known institutions, even when their skills match or exceed requirements.

- Model Design: Algorithms that weigh certain features (like word choice or tone) may unintentionally penalize specific demographic groups. For instance, an AI resume screening tool might emphasize certain keywords such as “leadership,” “strategy,” or “management.” While this seems neutral, it could unintentionally favor candidates who describe their experience using specific linguistic styles, potentially overlooking equally capable applicants who use different phrasing or come from industries with distinct terminology.

Similarly, if the model architecture gives higher weight to variables like educational background or previous employer size, it might inadvertently correlate “prestige” with “competence.” Such design choices, though unintentional, can affect fairness in candidate ranking.

- Feedback Loops: When biased outcomes reinforce themselves, the AI becomes progressively narrower in judgment. After an AI model goes live, it continues to learn in real time and evolves based on feedback from its own outcomes. This is where feedback loops can reinforce bias if left unchecked.

Suppose an organization’s hiring algorithm consistently recommends a certain “type” of candidate, say, those from specific industries or backgrounds. If recruiters continue hiring from that shortlist, the new data fed back into the system will reflect the same narrow profile. Over time, the algorithm “learns” that these are the right hires, and future candidates outside that pattern are deprioritized. This self-reinforcing cycle makes the AI increasingly narrow-minded.

With the rising adoption of AI, platforms are actively addressing these challenges through bias audits, dataset diversification, and transparency protocols. These measures help detect and correct biased decision patterns before they affect real candidates.

How to Ensure Video Interviews Remain Unbiased

Ensuring fairness in video interviews requires a combination of ethical AI design, continuous oversight, and transparent communication. Here are the most effective strategies:

a. Diverse and Balanced Training Data

AI fairness begins with the data it learns from. When AI models are trained on diverse and balanced datasets, they’re exposed to a wide range of accents, communication styles, age groups, genders, and cultural expressions. This helps the system understand that effective communication or competence can take many forms.

Here’s how it works: during training, large datasets of candidate responses (both audio and video) are fed into the AI model. Each data point is labeled with specific, job-relevant outcomes, for example, clarity of explanation, relevance of answer, or problem-solving approach, rather than subjective traits like tone or appearance. By learning from this diverse data, the AI builds a broader understanding of what “good performance” looks like across multiple demographics and contexts.

b. Data Anonymization

By design, AI systems can include anonymization layers that hide or remove personally identifiable information (PII) such as names, gender markers, photos, or accents during the initial screening phase. This helps ensure that assessments are based entirely on what candidates say and how relevant their responses are to the job – not on who they are.

Here’s how it works: before a candidate’s response is sent for scoring, the AI system masks data points that could reveal identity or demographics. For example, in video-based evaluations, facial analysis can be disabled and the video replaced with a speech-to-text transcript, so that visual appearance does not influence the assessment outcome.
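For transcripts, a very simple masking step might look like the sketch below. It is an assumption-laden illustration: the regular expressions are deliberately rough, the placeholder tokens are made up, and a production system would likely rely on a dedicated PII-detection component rather than hand-written patterns.

```python
import re

# Rough, illustrative patterns; they will not catch every email or phone format.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize_transcript(text: str, known_names: list) -> str:
    """Mask emails, phone numbers, and known candidate names before scoring."""
    masked = EMAIL_RE.sub("[EMAIL]", text)
    masked = PHONE_RE.sub("[PHONE]", masked)
    for name in known_names:
        pattern = r"\b" + re.escape(name) + r"\b"
        masked = re.sub(pattern, "[NAME]", masked, flags=re.IGNORECASE)
    return masked


transcript = ("Hi, I'm Maria Lopez. Reach me at maria.lopez@example.com "
              "or +1 415 555 0100.")
print(anonymize_transcript(transcript, ["Maria Lopez"]))
# Hi, I'm [NAME]. Reach me at [EMAIL] or [PHONE].
```

The scoring model then receives only the masked text, while the original transcript can be kept separately for authorized human review.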

Imagine evaluating two candidates for a customer support role. One has a regional accent, and the other speaks with a neutral tone. In a traditional interview, unconscious bias might lead the recruiter to favor one over the other. But with anonymization, the AI evaluates both responses only on content quality – such as clarity, relevance, and problem-solving ability – ensuring each candidate is judged purely on skill and communication strength.

This strategy not only helps create a fairer and more merit-based evaluation process but also builds trust among candidates, who know their responses are being reviewed objectively. It shifts hiring decisions from perception-driven judgments to data-backed insights, reinforcing the integrity of AI-assisted interviews.

c. Explainable AI (XAI)

Explainable AI (XAI) ensures that every score, rating, or recommendation generated by an algorithm can be traced back to understandable reasoning. Instead of functioning as a “black box,” XAI systems provide recruiters with clear visibility into how decisions are made, helping them validate, interpret, or even question the outcomes.

When a candidate completes a video interview, the AI doesn’t just assign a numerical score. It breaks down why that score was given. For instance, if a candidate received a lower communication score, XAI can show that it was due to factors like incomplete sentence structures, low relevance to the question, or lack of clarity. This transparency helps recruiters understand the AI’s logic and verify that the evaluation aligns with their hiring expectations. When hiring teams can track why and how a candidate was evaluated, they can detect patterns, refine models, and ensure fairness across hiring decisions.
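When the overall score is a weighted average of criterion scores, a breakdown like this can be computed exactly, because each criterion contributes a known number of points. The sketch below assumes that simple additive scoring model; more complex models need dedicated explanation techniques. The criteria, weights, and scores are hypothetical.

```python
def explain_score(scores: dict, weights: dict) -> list:
    """Return (criterion, points_earned, points_lost) rows, biggest loss first.

    Assumes scores are on a 0-100 scale and the overall score is their
    weighted average, so the earned points sum to the overall score.
    """
    total_weight = sum(weights.values())
    rows = []
    for criterion, weight in weights.items():
        earned = scores[criterion] * weight / total_weight
        possible = 100 * weight / total_weight
        rows.append((criterion, round(earned, 1), round(possible - earned, 1)))
    rows.sort(key=lambda row: row[2], reverse=True)
    return rows


# Hypothetical breakdown of a communication score.
weights = {"sentence completeness": 30, "relevance to question": 40, "clarity": 30}
scores = {"sentence completeness": 60, "relevance to question": 50, "clarity": 80}

for criterion, earned, lost in explain_score(scores, weights):
    print(f"{criterion}: earned {earned}, lost {lost}")
# relevance to question: earned 20.0, lost 20.0
# sentence completeness: earned 18.0, lost 12.0
# clarity: earned 24.0, lost 6.0
```

Sorting by points lost puts the main reason for a low score at the top, which is the part a recruiter most needs to verify.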

d. Regular Bias Audits

Ensuring fairness in AI-assisted hiring isn’t a one-time setup; it’s an ongoing process. Regular bias audits are crucial for maintaining transparency, accuracy, and trust in AI interviewing systems. These audits can be conducted both internally and by independent evaluators to check whether the AI’s decision-making process is consistent and equitable.

AI models are continuously tested with controlled datasets that represent various candidate demographics. Analysts then review whether similar responses from candidates of different backgrounds yield comparable scores. If discrepancies appear, for instance, if the model consistently rates one demographic group higher or lower for the same skill, developers can trace the issue back to the data layer or scoring logic and retrain the system with more balanced inputs.
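One widely used audit check compares selection rates across groups rather than individual scores. The sketch below applies the four-fifths rule of thumb from the EEOC’s Uniform Guidelines, under which a group whose selection rate is below 80% of the highest group’s rate is flagged for closer review. It is a swapped-in, simplified technique, not the score comparison described above; the group labels and counts are invented for illustration.

```python
from collections import defaultdict


def selection_rates(records) -> dict:
    """records: iterable of (group, advanced) pairs, where advanced is a bool."""
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, passed in records:
        totals[group] += 1
        advanced[group] += int(passed)
    return {group: advanced[group] / totals[group] for group in totals}


def impact_ratios(rates: dict) -> dict:
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody advanced in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(records, threshold: float = 0.8) -> list:
    """Groups whose impact ratio falls below the threshold (four-fifths by default)."""
    ratios = impact_ratios(selection_rates(records))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Invented audit sample: 6 of 10 advance in group A, 4 of 10 in group B.
records = ([("A", True)] * 6 + [("A", False)] * 4
           + [("B", True)] * 4 + [("B", False)] * 6)
print(flag_groups(records))  # ['B']
```

A flag here is a prompt for investigation, not proof of bias; small samples in particular can produce low ratios by chance.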

By conducting these audits regularly, say, every quarter or after major model updates, organizations can ensure that their AI tools continue to make skill-based, unbiased evaluations. This continuous monitoring also helps meet compliance requirements under global data ethics and AI governance frameworks, such as the EU AI Act and EEOC guidelines in the U.S.

e. Human-in-the-Loop Oversight

Even the most advanced AI is meant to enhance human decision-making, not replace it. The concept of human-in-the-loop oversight ensures that every AI-assisted hiring decision is guided by both data and human judgment. This creates a balance where AI provides efficiency and standardization, while human recruiters contribute context, empathy, and intuition.

Here’s how it works:

- AI performs the initial screening and scoring, capturing candidate information on predefined, job-specific parameters such as relevance, clarity, problem-solving, and communication. It suggests standardized scores, so every candidate is measured against the same criteria, reducing inconsistency.

- Recruiters then review anonymized transcripts or video snippets to interpret soft skills and cultural alignment. This ensures that the AI’s quantitative insights are supported by qualitative human evaluation.

- Discrepancies or anomalies trigger human audits. For instance, if two candidates with similar responses receive vastly different scores, a hiring manager can step in to review and, if needed, adjust the evaluation.
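The discrepancy trigger in the last step can be sketched as a pairwise comparison: if two transcripts are very similar but their scores differ widely, the pair is queued for human review. Word-overlap (Jaccard) similarity is used here only as a simple stand-in for a real semantic similarity measure, and the thresholds and sample data are hypothetical.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two transcripts (0.0 to 1.0)."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def flag_for_review(responses: dict, min_similarity: float = 0.8,
                    max_score_gap: float = 20) -> list:
    """responses maps candidate_id -> (transcript, score).

    Returns pairs of candidates whose answers are similar but whose
    scores differ by more than max_score_gap.
    """
    flagged = []
    for (id_a, (text_a, score_a)), (id_b, (text_b, score_b)) in combinations(
            responses.items(), 2):
        similar = jaccard(text_a, text_b) >= min_similarity
        if similar and abs(score_a - score_b) > max_score_gap:
            flagged.append((id_a, id_b))
    return flagged


responses = {
    "c1": ("I resolved the outage by rolling back the release "
           "and notifying customers", 85),
    "c2": ("I resolved the outage by rolling back the release "
           "and notifying clients", 50),
    "c3": ("I enjoy working with people", 60),
}
print(flag_for_review(responses))  # [('c1', 'c2')]
```

The function only surfaces candidates for a second look; the decision to adjust a score stays with the hiring manager.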

This AI-plus-human framework ensures hiring decisions are both data-driven and empathetic. Recruiters gain efficiency from AI’s ability to process thousands of interviews consistently, while still maintaining control over the final judgment.

Compliance Frameworks Supporting Fair and Transparent Hiring

As AI adoption in recruitment expands, governments and regulatory bodies have established standards around fairness, privacy, and transparency. Organizations using AI in hiring are required to stay aligned with these frameworks to maintain both compliance and public trust.

Key Global and U.S. Regulations

Ethical AI Governance

Beyond compliance, organizations are adopting AI ethics charters that promote principles like transparency, accountability, and inclusivity. These include:

- Candidate consent protocols, to ensure that candidates are informed when and how AI is used in the hiring lifecycle.

- Fairness documentation, which includes maintaining records and fairness reports that disclose training data and audit outcomes.

- Independent external audits to certify that AI models meet fairness and security standards.

For hiring software providers like Jobma, adherence to frameworks such as SOC 2 Type II and GDPR helps keep AI-driven decisions traceable, ethical, and compliant. This adherence signals to HR leaders that the platform doesn’t just deliver automation; it upholds integrity and accountability in every step of the hiring process.

The Path Forward: From Compliance to Commitment

As AI and video interviewing continue to redefine how organizations attract and evaluate talent, fair hiring in this new age depends on intent and accountability. The goal isn’t to eliminate human judgment, but to elevate it with intelligent, ethical systems.

Compliance frameworks like GDPR, SOC 2, and New York City’s Local Law 144 set the baseline. True fairness goes beyond legal requirements – it’s about creating systems that consciously counteract bias and give every candidate an equal opportunity to succeed.